Imagine this scenario. The digital assets you’ve created as conversion points for your customers need improvement. You’re unsure which changes will generate positive outcomes. You could guess and risk breaking experiences that are working for you. Maybe you opt for an A/B testing methodology, but discover the insights are limited, or the changes you make don’t yield the results you expect.

Sound familiar?

A/B testing can be a murky process, and false positives and false negatives are widespread:

- False positive: Your test reached statistical significance (stat sig), only for you to realize over time that the change didn’t deliver the lift you expected and actually decreased your conversion rates.

- False negative: Your test appeared to be performing poorly, but if you had let it run long enough, you would have found it was a winning idea. This also translates into missed conversion opportunities.

It’s also likely that your ability to change your digital assets is restricted by resources. When you hard-code changes from an experiment that turns out to be a false positive, the time invested by design, development, product, and marketing becomes a sunk cost. The change may also cause you to miss your revenue projections.

These outcomes can be scary. They can lead to risk aversion, push teams toward more conservative approaches, and make it harder to get leadership buy-in to keep spending time on experimentation. When the experimentation culture shifts to “best practices” or heuristics, it undermines the point of experimentation: being innovative to gain a competitive advantage in the marketplace.

So how do you mitigate the downside and maximize the upside in your experimentation strategy?

The answer is simple. Evolve your process.

Understanding Active Learning for Experimentation

Experimentation technology has progressed from A/B testing to more sophisticated methods like continuous optimization. Active learning represents the latest advancement and a revolutionary departure from its predecessors.

Generation one: CRO tools

CRO tools like Optimizely are a variation of traditional, manual A/B tests. These tools automate processes but often rely on frequentist methodologies for experimentation. The challenge with a frequentist methodology is that its motto is: “Boom, we hit our mark. From this day forward, this is the answer.” That mark, also known as stat sig, isn’t always correct because of peeking: checking results repeatedly and stopping the test as soon as it looks significant. Peeking is a common problem in frequentist experimentation, and it can cause false positive or false negative errors.
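To make the peeking problem concrete, here is a small simulation sketch in Python. It runs A/A tests, where both arms share the same true conversion rate, so any declared winner is a false positive by construction. It compares checking a pooled two-proportion z-test after every batch of visitors (stopping at the first significant result) with checking only once at the planned sample size. The conversion rate, batch size, number of looks, and function names are illustrative assumptions, not settings from any particular tool.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no variation yet (e.g. zero conversions): no evidence either way
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # Two-sided tail area of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


def run_aa_test(rate: float, looks: int, visitors_per_look: int,
                alpha: float, rng: random.Random) -> tuple[bool, bool]:
    """Simulate one A/A test in which both arms share the same true rate.

    Returns (peeking_false_positive, fixed_horizon_false_positive).
    """
    conv_a = conv_b = n = 0
    significant_at_any_look = False
    p_value = 1.0
    for _ in range(looks):
        for _ in range(visitors_per_look):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
        n += visitors_per_look
        p_value = two_proportion_p_value(conv_a, n, conv_b, n)
        # Peeking: an analyst who stops at the first significant look declares a winner.
        if p_value < alpha:
            significant_at_any_look = True
    # Fixed horizon: only the final look, at the planned sample size, counts.
    return significant_at_any_look, p_value < alpha


def false_positive_rates(sims: int = 1000, rate: float = 0.05, looks: int = 10,
                         visitors_per_look: int = 100, alpha: float = 0.05,
                         seed: int = 42) -> tuple[float, float]:
    """Return (peeking false positive rate, fixed-horizon false positive rate)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(sims):
        peeked, fixed = run_aa_test(rate, looks, visitors_per_look, alpha, rng)
        peeking_hits += peeked
        fixed_hits += fixed
    return peeking_hits / sims, fixed_hits / sims


if __name__ == "__main__":
    peeking, fixed = false_positive_rates()
    print(f"False positive rate with peeking:   {peeking:.1%}")
    print(f"False positive rate, fixed horizon: {fixed:.1%}")
```

With these defaults, the fixed-horizon rate should land near the nominal 5%, while the peeking rate comes out noticeably higher; the exact figures depend on the seed and settings.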

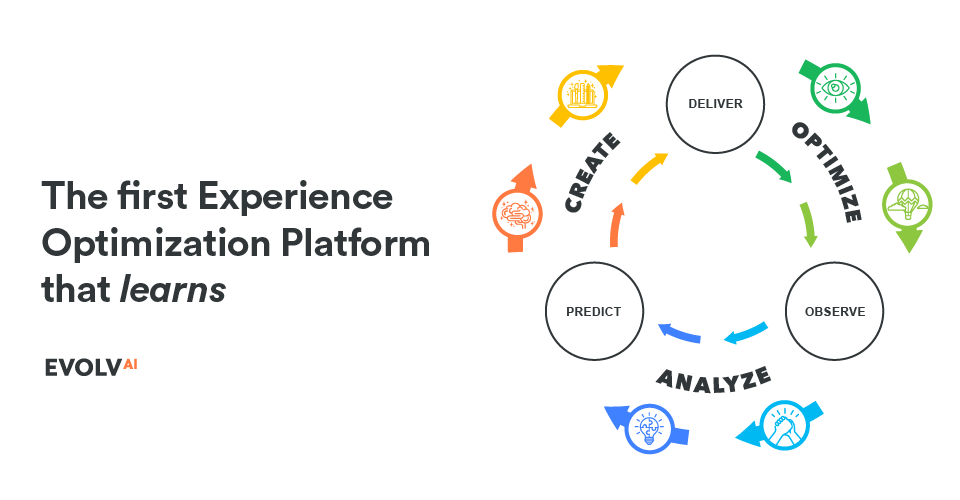

Generation two: continuous optimization

With continuous optimization, a person implements a change they want to make to their digital assets, a sequence of optimization processes runs using a Bayesian methodology for experimentation, and observations begin to emerge. The end user assesses the observations and creates new testing ideas from the findings, then feeds those changes back into the system, and the process starts again. The predictions and outcomes are more reliable than those from CRO tools.
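As a rough illustration of Bayesian reasoning in experimentation, the sketch below uses a Beta-Binomial model, a standard textbook approach and not necessarily what any particular vendor uses. Each variant’s conversion rate gets a Beta posterior, starting from an assumed uniform Beta(1, 1) prior, and Monte Carlo draws estimate the probability that variant B converts better than variant A given the data observed so far. The function name and example counts are hypothetical.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 20_000, seed: int = 0,
                   prior: tuple[float, float] = (1.0, 1.0)) -> float:
    """Estimate P(rate_B > rate_A | data) under independent Beta-Binomial models."""
    rng = random.Random(seed)
    alpha0, beta0 = prior
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(prior_alpha + conversions, prior_beta + non-conversions).
        rate_a = rng.betavariate(alpha0 + conv_a, beta0 + n_a - conv_a)
        rate_b = rng.betavariate(alpha0 + conv_b, beta0 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical counts: 120 of 2,400 visitors converted on A versus 150 of 2,400 on B.
print(f"P(B beats A) = {prob_b_beats_a(120, 2400, 150, 2400):.3f}")
```

With these hypothetical counts, the estimate should come out high but short of certainty: graded evidence a team can weigh against the cost of being wrong, rather than a single pass/fail threshold.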

Generation three: active learning

With active learning, you can decrease the time required to analyze insights and develop new ideas. Active learning uses historical insights and causal inference across all user data to connect users to the best possible experiences and to generate new ideas for further optimization. Generative AI, as a component of active learning, makes it possible to iterate on elements of your landing pages, product pages, checkout pages, and other digital assets on the fly as new ideas surface.

Putting Active Learning into Action

Let’s say you have a great hypothesis. Great, feed it into the system. Feed in as many ideas as you want. From day one, the core of Evolv AI’s product has been rooted in a Bayesian, multivariate approach to experimentation designed to let you continuously test multiple ideas simultaneously.
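The post doesn’t describe Evolv AI’s algorithm in detail, so the following only illustrates one well-known Bayesian way to test several ideas at once: Thompson sampling, a multi-armed bandit technique, swapped in here for illustration. Each variant is a combination of two page elements, echoing the multivariate idea. For every simulated visitor, the sketch draws a plausible conversion rate for each combination from its Beta posterior and serves the combination with the highest draw, so traffic shifts toward stronger performers as evidence accumulates. The element names, `TRUE_RATES`, and `thompson_sampling` are hypothetical and exist only to drive the simulation.

```python
import itertools
import random

# Hypothetical page elements; every headline x button combination is one variant.
HEADLINES = ["control", "benefit-led"]
BUTTONS = ["blue", "green"]

# Hypothetical "true" conversion rates, unknown to the algorithm, used only to simulate visitors.
TRUE_RATES = dict(zip(itertools.product(HEADLINES, BUTTONS), [0.03, 0.04, 0.05, 0.10]))


def thompson_sampling(true_rates: dict, visitors: int, seed: int = 7) -> dict:
    """Allocate simulated visitors across variants with Beta-Bernoulli Thompson sampling.

    Returns how many visitors each variant received.
    """
    rng = random.Random(seed)
    successes = {arm: 0 for arm in true_rates}
    failures = {arm: 0 for arm in true_rates}
    for _ in range(visitors):
        # Draw one plausible rate per variant from its Beta(1 + successes, 1 + failures) posterior.
        draws = {arm: rng.betavariate(1 + successes[arm], 1 + failures[arm]) for arm in true_rates}
        chosen = max(draws, key=draws.get)
        # Simulate the visitor's outcome using the chosen variant's hypothetical true rate.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return {arm: successes[arm] + failures[arm] for arm in true_rates}


if __name__ == "__main__":
    for arm, served in thompson_sampling(TRUE_RATES, visitors=5000).items():
        print(arm, served)
```

A production system would update from real traffic rather than simulated outcomes; the simulation just makes the traffic-shifting behavior visible.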

Evolv AI’s predictive AI monitors your experiments, variants, and user traffic. It categorizes the changes made within the system, generalizes them, and automatically creates new ideas for your team to experiment with. Recommendations are force-ranked for your consideration, starting with those measured to provide the highest impact for the lowest level of effort. This process makes it easier for the end user to gather and validate insights, creating a system that enables your team to learn from experimentation mistakes before making them.

Once you select which experiments and variants you want to apply to production, you can use Evolv AI’s generative AI features to create code, copy, and images to bring your experiments to life with agility and speed. The efficiency of generative AI is a game-changer. Instead of spending time retraining models or slowing your pace of experimentation, anyone on your team can simply adjust their prompts and quickly set new tests live.

Active learning augments human creativity throughout the design thinking and experimentation process, giving your team the most brilliant user experience leader in the world.

Want to see how an active learning system can work for you? Book time to speak with someone on our team and learn how to optimize your experimentation needs with Evolv AI.